Assignment 5 Report

Introduction / Overview

When shopping online, it is hard to imagine how a product will actually look on us, something we can usually check in a conventional store. This is one of the reasons customers come to regret their purchases. Our application is designed to address this problem with virtual models and interactive interfaces, focusing on eyeglasses purchases.

For this version, we updated functions such as saving the current choice and adjusting the model’s position, which show the value of our application more clearly than before. We also expanded the range of eyeglasses to five models.

Technical Development

To realise our design in augmented reality, we chose Unity and the Vuforia Engine. Unity let us work with the objects directly, positioning them and attaching behaviour to them through scripts. The Vuforia Engine is an AR toolkit for Unity; in our application it performs target detection and augments the model onto the target.

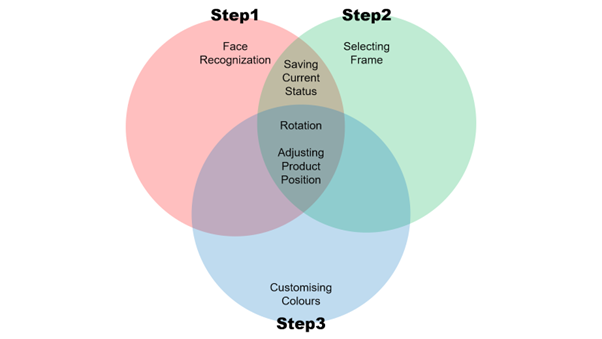

For the interface, we divided the ordering process into three steps and implemented as many options as we could for each step, so that users would not be confused by the new features and could make a variety of choices.

Step 1. Face Recognition

When users hold a picture of themselves up to the camera, the application captures their facial appearance and builds a 3D model of it. We used a Unity asset named “Avatar Maker” for this process because it produced fairly accurate models. In future development, we hope to adopt a technique that recognises users' faces directly through the camera, so that users do not need to prepare a picture.

Step 2. Selecting Frame

After the 3D model has been created and displayed, the next step is frame selection. This step lets the user select and try on different shapes of glasses with the <next> and <previous> buttons. To make this work, we wrote a script that consists of an array storing the glasses models and a function that changes the model according to the button pressed. When a button is clicked, the script changes the index of the glasses accordingly: the previous glasses are switched off and the new glasses are switched on.

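    // Find the index of the glasses model that is currently active.
    for (int i = 0; i < glasses.Length; i++)
    {
        if (glasses[i].activeInHierarchy)
        {
            current = i;
        }
    }

    // Change the model: hide the current glasses and show the previous ones.
    if (current == 0)
    {
        // The first model has no predecessor, so it is only hidden.
        glasses[0].SetActive(false);
    }
    else if (current > 0)
    {
        glasses[current].SetActive(false);
        glasses[current - 1].SetActive(true);
    }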
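One way to handle both buttons with a single rule is to wrap the index around the ends of the array, so that pressing <previous> on the first model shows the last one. The sketch below is plain C# with no Unity dependency and is only an illustration, not the script used in the application. `FrameSelector`, `Next` and `Previous` are hypothetical names, and the wrap-around behaviour is an assumption.

```csharp
using System;

// Hypothetical helper: tracks which of `count` glasses models is selected.
public class FrameSelector
{
    private readonly int count;

    // Index of the currently selected model, always in the range [0, count).
    public int Current { get; private set; }

    public FrameSelector(int count)
    {
        if (count <= 0) throw new ArgumentOutOfRangeException(nameof(count));
        this.count = count;
        Current = 0;
    }

    // Move to the next model, wrapping from the last back to the first.
    public int Next()
    {
        Current = (Current + 1) % count;
        return Current;
    }

    // Move to the previous model, wrapping from the first to the last.
    // Adding `count` before the modulo keeps the result non-negative.
    public int Previous()
    {
        Current = (Current - 1 + count) % count;
        return Current;
    }
}
```

In a Unity script, the returned index could decide which `glasses[i]` receives `SetActive(true)` while every other entry receives `SetActive(false)`.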

Step 3. Customising Colours

Once the user has settled on a frame shape, they can continue to the next step, colour selection. Here the user further customises their eyewear using a drop-down menu. To implement this, we first needed to find eyeglasses models in which the lenses and the frame are separate objects. Since all parts of the glasses were grouped under one parent object, we used the GetChild function to access them and the material property of each part's Renderer to change its colour.

    // Get the children of the parent and store them in the child array.
    for (int i = 0; i < children; i++)
    {
        child[i] = parent.transform.GetChild(i).gameObject;
    }

    // Change the colour of the parts with an index below 5 (the frame parts).
    for (int i = 0; i < 5; i++)
    {
        child[i].GetComponent<Renderer>().material = colors[1];
    }

    // Change the colour of the remaining parts (only the lens parts are left).
    for (int i = 5; i < children; i++)
    {
        child[i].GetComponent<Renderer>().material = colors[1];
    }
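The split between frame parts and lens parts can also be expressed as one rule on the child index, which makes it easy to give the two groups different colours. The following is a minimal sketch in plain C#, assuming the frame parts always come first among the children. `PartColours` and `Assign` are hypothetical names, and colours are represented as strings purely for illustration (the application itself assigns Unity materials).

```csharp
using System;

// Hypothetical helper: decides which colour each child part receives.
public static class PartColours
{
    // Children with an index below framePartCount are treated as frame parts;
    // all remaining children are treated as lens parts.
    public static string[] Assign(int childCount, int framePartCount,
                                  string frameColour, string lensColour)
    {
        if (framePartCount < 0 || framePartCount > childCount)
            throw new ArgumentOutOfRangeException(nameof(framePartCount));

        var result = new string[childCount];
        for (int i = 0; i < childCount; i++)
        {
            result[i] = i < framePartCount ? frameColour : lensColour;
        }
        return result;
    }
}
```

For example, `PartColours.Assign(7, 5, "black", "blue")` describes a model with seven children (a made-up count) whose first five parts form the frame.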

The following features are shared across the steps and are arguably the main strength of our application, because they give users an objective view of themselves.

In both Step 1 and Step 2, we provide a “save” button that displays an additional 3D face. It stores the current face with the selected item to make comparison easier. To clone the face, we used the Instantiate function and adjusted the clone’s size and location to fit the screen canvas. We also used the Destroy function to prevent the face from being duplicated indefinitely.

    // If a clone already exists, destroy it before making a new one.
    if (count > 0)
    {
        Destroy(clone);
    }

    // Clone the face and place it on the screen canvas.
    clone = Instantiate(myObject);
    clone.transform.SetParent(myCanvs.transform);
    clone.transform.localScale = new Vector3(800, 800, 800);
    clone.transform.localPosition = new Vector3(-300, 0, 0);
    clone.transform.localRotation = Quaternion.Euler(0, 0, 0);
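The "destroy the old clone, then store a new one" pattern can be isolated into a small single-slot container. The sketch below is plain C# and only illustrates the idea under stated assumptions: `SnapshotSlot` is a hypothetical class, and the `clone` and `destroy` delegates stand in for Unity's `Instantiate` and `Destroy`. It tracks the stored reference itself rather than a separate counter.

```csharp
using System;

// Hypothetical single-slot store: holds at most one saved copy at a time.
public class SnapshotSlot<T> where T : class
{
    private readonly Func<T, T> clone;   // how to copy the source object
    private readonly Action<T> destroy;  // how to dispose of an old copy

    // The currently saved copy, or null if nothing has been saved yet.
    public T Saved { get; private set; }

    public SnapshotSlot(Func<T, T> clone, Action<T> destroy)
    {
        this.clone = clone ?? throw new ArgumentNullException(nameof(clone));
        this.destroy = destroy ?? throw new ArgumentNullException(nameof(destroy));
    }

    // Replace the saved copy with a fresh copy of `source`.
    // The previous copy is destroyed first, so copies never accumulate.
    public T Save(T source)
    {
        if (Saved != null)
        {
            destroy(Saved);
        }
        Saved = clone(source);
        return Saved;
    }
}
```

Keeping the check on the stored reference means the slot cannot fall out of sync with a separately maintained count.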

In all steps, users can adjust the position of the glasses on their face with the virtual buttons around the face, and check how they look from different angles with the rotation feature.

For the virtual buttons, we used the built-in virtual button feature of the Vuforia asset to place them on the image target and detect a finger press. We then linked the glasses to a script that uses localPosition to move them in a specific direction.

    // Move the glasses along the negative local y-axis.
    // Multiplying by Time.deltaTime keeps the speed independent of the frame rate.
    glasses[current].transform.localPosition += new Vector3(0, -0.005f * Time.deltaTime, 0);
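Scaling the offset by the frame time means the glasses travel the same distance per second whatever the frame rate is. The sketch below checks this in plain C# by simulating a fixed frame rate. `FrameRateDemo` and `Displacement` are hypothetical names, and the constant `deltaTime` is a simplifying assumption standing in for Unity's `Time.deltaTime`, which varies from frame to frame.

```csharp
using System;

// Hypothetical simulation of holding a virtual button for a while.
public static class FrameRateDemo
{
    // Total displacement after `seconds` at a fixed number of frames per second.
    public static float Displacement(float unitsPerSecond, float seconds, int framesPerSecond)
    {
        float deltaTime = 1f / framesPerSecond; // stands in for Time.deltaTime
        int frames = (int)Math.Round(seconds * framesPerSecond);

        float offset = 0f;
        for (int i = 0; i < frames; i++)
        {
            // Same update rule as the snippet: speed multiplied by frame time.
            offset += unitsPerSecond * deltaTime;
        }
        return offset;
    }
}
```

Calling `Displacement(-0.005f, 1f, 30)` and `Displacement(-0.005f, 1f, 60)` should give nearly the same result, up to floating-point rounding, because the per-frame step shrinks as the frame count grows.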

For the rotation feature, we used a slider in the user interface to show the current angle of rotation directly. As the user drags it, the number on the slider changes and the face rotates accordingly.

    // Update the displayed number whenever the slider value changes.
    mySlider.onValueChanged.AddListener((v) =>
    {
        myText.text = v.ToString("0");
    });

    // Rotate the face around the y-axis to match the slider value.
    myObject.transform.localEulerAngles = new Vector3(0, mySlider.value, 0);

3D Content

The 3D content in the interface solution consists of a face model, which represents the user's face, and several eyeglasses models in different shapes, since customers tend to want a variety of choices, especially when choosing eyewear.

First, a 3D face model represents the user's face. It is essential for helping users visualise themselves wearing the selected model as they customise their pair of glasses. Recognising the face model's image target plays the same role as the face recognition feature in the existing AR application by Warby Parker. The image target used in the interface solution is meant as a demo; the intended version would recognise photos of the customers themselves, used as image targets. The 3D face model therefore also acts as a "mirror" while customers customise the products.

3D models of eyeglasses are the other main element of the interface solution. We selected eyeglasses as the main fashion item because people tend to be more careful when purchasing eyewear, given its long-term use, than when purchasing other fashion items such as shirts or jackets. For the same reason, customers expect a wide variety, so we provide several frame shapes, including square, round and cat-eye frames.

While customising the eyeglasses, users will see two models of square-shaped frames. These are included to showcase the “save” button: when a user is choosing between two similarly shaped models, the difference is harder to notice, and the “save” button comes in handy for comparing the current and previous selections. The other shapes (round, cat-eye and sport models) provide more variety and also meet the demand for trending frame shapes, so customers should be more satisfied because they are not restricted to a small number of choices.

Usability Testing

Scope

Usability testing allows our team to gather both quantitative and qualitative insights. With these insights, we can identify users' pain points across the overall application flow and understand what users think as they engage with the app. The team can then see ways to enhance the application's interface.

Six participants, all young adults, were recruited for the testing. They provided a lot of information about the interface application, both negative and positive, which we will use to improve the current version.

Design and plan

Our questionnaire can be divided according to the intention of each question.

The survey begins with two foundational questions, which ask about participants’ experience with the interface problem in the existing application, as seen in Picture 1. These questions help us understand users’ current experience with online shopping and how they usually feel after buying a new product from an e-commerce company. The data gathered from the foundational questions are crucial because they help determine how reasonable the topic of the interface solution is.

Next came a set of quantitative questions on how easy the interface solution is to use and how useful the application was. We covered these two topics with one and three questions respectively, based on the features we have.

Lastly, we prepared three qualitative questions that required short answers, each with a clear aim.

We had noticed that an existing application by “Warby Parker” works similarly to ours. Nonetheless, we expected that the difference in how the mirror feature looks would lead users to different preferences, and finding this out was the aim of the first question.

With the last two questions, we hoped to collect features that users personally want added and general feedback for future development.

Addressing the Results of the Usability Testing

Tester

- Download the app through the link: https://www.dropbox.com/s/lvl07tr2ejz8wvp/assignment44.zip?dl=0

- Has a laptop or PC with a webcam

- Studies KIT208 or has a basic understanding of AR

- Likes online shopping

Given Scenario

GlassesAR is an online glasses shopping app that helps users choose a suitable pair of glasses online without having to go to a store. The user wants to buy a pair of glasses with this app.

Tasks

Step 1. Face recognition

- When you hold your picture up to the camera, the application will recognise your face and create a 3D model of it

- For the current version, please assume that the given picture is you.

Step 2. Selecting the frame of glasses

- Use the on-screen interface with a mouse

- There are buttons for the previous model, the next model, and saving the current status.

- You can also adjust the position of the glasses with the virtual buttons on each side of your face

Step 3. Customization

- Customize your own glasses by changing colours

- You can use the save button to notice the differences easily.

Step 4. The end

- Lastly, check what you have chosen and order it

Findings table

Analysis on findings

Topic1:

The survey shows that 75 percent of the participants answered the questions on this topic positively, which suggests that the current interface needs a solution and that our choice of interface problem was reasonably sound.

Topic2:

All participants (100 percent) reported that the application was easy to use. This suggests that our approach, which follows the flow of the existing interface, keeps users from being confused by the new features.

Topic3:

For the questions on how useful the application was, 100 percent of the participants agreed that our application could be a solution to the interface problem we identified.

The result of the question about participants’ preference between Warby Parker’s application and ours was interesting.

More than half of the participants chose Warby Parker’s application. Most of the reasons given for the negative answers concerned the realism of the face model. Putting the glasses on a 3D model of the face did not make users confident about the purchase, even though more customisation features are offered.

However, most of the answers recognised one strength of our application: it lets users look at themselves from all angles. We therefore concluded that there is still a need for our application, and that with more realistic models for the face and glasses we could win over more users.

Based on the findings and opinions from the testers, the app still has a lot of room for improvement. Our team will construct a plan to address these findings. As the app's developers, we will polish it based on the feedback before releasing the first version.

Plan for improvement

| Issues | Recommendations | Difficulty level |
| --- | --- | --- |
| Simplistic interface | Polish the interface with better-designed buttons and text | Medium |
| Missing back button | Add a back button to steps 2, 3, 4 and 5 | Easy |
| Limited products and frames | Add a wider range of glasses and frames | Medium |
| Bugs in the app | Polish the scripts | Medium |
| Missing cart feature | Add a cart feature in a future version | Hard |
| Missing sunglasses accessories feature | Not a development priority for the time being | Hard |
| Lack of natural lighting (depth and realistic view) | Implement this feature using natural lighting in Unity | Hard |
| Missing home interface | Add a home interface in the release version | Medium |
| Realism issue | This issue is very hard to fix and will need | Hard |

| Program | Feature | Interface Design |
| --- | --- | --- |
| Fix any bugs in the app | Add a back button | Polish the interface with better-designed buttons and text |
| Add natural lighting | Add a wider range of glasses and frames | Add a home interface for the app |
| | Add a cart feature | |

References

Used techniques

- Vuforia Engine: https://developer.vuforia.com/

- Avatar Maker Free - 3D avatar from a single selfie (itSeez3D Inc)

- Google Forms: https://docs.google.com/forms/u/0/

Glasses references

Shape 1: Square-shaped glasses 1 (https://www.cgtrader.com/free-3d-models/household/other/glasses-87a0e281-6f4c-414b-82ed-758635e01365)

Shape 2: Square-shaped glasses 2 (https://free3d.com/3d-model/glasses-56919.html)

Shape 3: Round-shaped glasses (https://www.turbosquid.com/ko/3d-models/glasses-fashion-3d-model-1603673)

Shape 4: Cat eye-shaped glasses (https://sketchfab.com/3d-models/cat-eye-glasses-a9e9aef9d630407f9956eca64d54719e)

Shape 5: Sport glasses (https://www.cgtrader.com/items/313750/download-page)

Report references

Warby Parker AR video: https://www.youtube.com/watch?v=HoSjmiVLsLU

Picture for the target image: https://www.faceapp.com/
